Web scraping is a technique used to collect publicly available data from websites and convert it into structured formats for analysis. It plays an important role in competitive research, market analysis, and data-driven decision-making across many industries. This article explains how web scraping works, why it matters in today’s market, and how it is applied in real-world scenarios.

1. Understanding Web Scraping and its importance in today's market

Web scraping is increasingly recognized as a core method for collecting large volumes of publicly available data from the web. Rather than serving as a one-off technique for extracting information from individual pages, it now plays a central role in structured data collection workflows used by businesses, researchers, and digital teams across many industries.

As organizations place greater emphasis on data-driven analysis, the open web has become one of the most dynamic and comprehensive sources of real-world information. Websites continuously publish data related to pricing, user behavior, reviews, news, and market activity, making web-based data essential for understanding changing trends and competitive environments. Web scraping enables this information to be gathered in a consistent and scalable manner, transforming unstructured web content into usable datasets.

The growing adoption of artificial intelligence, big data analytics, and automated decision systems has further increased the demand for reliable web data. These systems depend on large, frequently updated datasets to function effectively, and manual data collection is no longer practical at scale. As a result, web scraping is often integrated into automated pipelines designed to operate continuously and with minimal human intervention.

At the same time, modern web environments have become more complex. Websites increasingly implement anti-bot mechanisms, rate limits, and access controls, which introduces new challenges for large-scale data collection. Effective web scraping today therefore requires not only an understanding of basic extraction techniques, but also the ability to manage sessions, accounts, and browser environments in a stable and sustainable way.

Understanding this broader context helps clarify why web scraping has become an essential capability in today’s market, and sets the foundation for exploring its core principles, automation strategies, and real-world applications in the sections that follow.

1.1. The basics of Web Scraping

Web scraping serves as a bridge between businesses and the vast amount of data available on the internet. By leveraging automation, organizations can extract relevant information from websites efficiently, without relying on time-consuming and error-prone manual processes. This approach allows data to be collected at scale and transformed into formats suitable for analysis and decision-making.

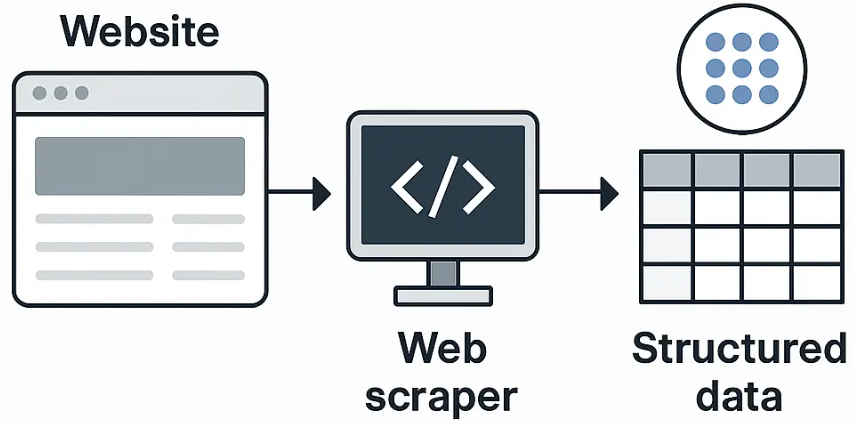

At its core, web scraping involves several fundamental steps:

- Data extraction: Automated tools send requests to web pages and retrieve specific information based on predefined parameters.

- HTML parsing: Once the content is collected, scrapers analyze the HTML structure of web pages to locate and isolate relevant data points.

- Output formatting: The extracted data is then converted into structured formats such as spreadsheets, databases, or JSON files for further use.

The ability to automate these steps represents a significant evolution in data collection, enabling raw web content to be transformed into actionable insights in a consistent and repeatable way.

Web scraping vs. web crawling

Web scraping is often confused with web crawling, but the two concepts serve different purposes. Web crawling is primarily concerned with discovering and navigating web pages by following links, typically to build an index of available content. Search engines rely heavily on crawlers to understand website structures and identify new or updated pages.

Web scraping, in contrast, focuses on extracting specific data from known web pages. While crawling answers the question “Which pages exist?”, scraping addresses “What information can be extracted from these pages?”. In many real-world systems, crawling and scraping are used together, but their objectives and outputs remain distinct.

Web scraping vs. APIs

Another important distinction is between web scraping and the use of application programming interfaces (APIs). APIs provide structured, officially supported access to data, often with clear documentation, usage limits, and predefined data fields. When an API is available and sufficiently comprehensive, it is usually the most stable and compliant option.

However, APIs may expose only limited datasets, restrict access, or be unavailable for certain platforms. In such cases, web scraping becomes a practical alternative when data is publicly accessible on web pages but not fully available through APIs, or when greater flexibility is required.

When to use web scraping and when to use APIs

Web scraping is most suitable for scenarios involving publicly visible data that changes frequently or spans multiple pages and sources, such as competitive pricing, product listings, customer reviews, or news content. APIs are better suited for situations that require long-term stability, guaranteed data structures, and official access channels.

Understanding these differences helps clarify the role of web scraping as a complementary data collection method rather than a replacement for crawling systems or APIs. This foundation is essential for exploring automation strategies and challenges in the following sections.

1.2. The role of automation in Web Scraping

Automation plays a central role in modern web scraping by enabling data to be collected faster, more consistently, and at a much larger scale than manual methods. Instead of relying on human interaction, automated scraping systems can continuously access websites, extract predefined data points, and process information with minimal intervention. This significantly reduces time and operational effort, especially when dealing with frequently updated or high-volume data sources.

By automating repetitive tasks such as page navigation, data extraction, and formatting, organizations can maintain up-to-date datasets while minimizing human error. Automation also allows scraping processes to be standardized, making results more reliable and easier to integrate into downstream analytics or business intelligence workflows.

Automation at different levels

Automation in web scraping can be implemented at varying levels of complexity, depending on the scope and objectives of the project:

- Simple scripts: Basic automation using lightweight scripts to extract data from a limited number of static pages. This approach is suitable for small-scale tasks or one-time data collection.

- Scheduled scraping: Automated jobs that run at predefined intervals to collect updated data, such as daily price checks or periodic content monitoring.

- Large-scale scraping pipelines: Advanced systems designed to collect data across thousands of pages, platforms, or accounts. These pipelines often involve distributed workloads, error handling, logging, and data validation to support continuous operation.

As scraping moves from simple scripts to large-scale pipelines, automation becomes essential for maintaining efficiency and scalability.

However, increased automation also introduces new challenges. Highly automated scraping behavior can appear repetitive and non-human, making it easier for websites to detect and block such activity. Frequent requests, predictable patterns, and reused browser characteristics can trigger anti-bot systems, rate limits, or account restrictions.

To operate reliably at scale, automated scraping systems must therefore manage multiple technical factors, including IP rotation, browser fingerprint consistency, and session handling. Without proper control over these elements, even well-designed scraping workflows may become unstable or short-lived. This is why modern web scraping increasingly depends on controlled browser environments and identity management, rather than automation alone.

Despite its advantages, web scraping does come with challenges. Not all websites permit web scraping, and some implement measures to block scrapers. Businesses must tread carefully to ensure they comply with legal and ethical standards while executing web scraping jobs.

Moreover, relying solely on scraped data without proper validation may lead to inaccurate conclusions. Therefore, combining scraped data with other research methodologies can significantly improve overall decision-making and strategy formulation.

2. Challenges and ethical considerations of Web Scraping

Despite its advantages, web scraping presents a range of technical, legal, and ethical challenges that organizations must carefully manage. As websites increasingly protect their data and regulations around data usage become more stringent, scraping is no longer a purely technical task but one that requires strategic planning and responsible execution.

2.1. Technical challenges in web scraping

From a technical standpoint, one of the primary difficulties of web scraping is website variability. Page structures may change without notice, JavaScript-rendered content can complicate extraction, and inconsistent HTML markup may lead to incomplete or broken datasets. These issues require continuous monitoring and maintenance of scraping workflows to ensure data accuracy and stability.

In addition, performance limitations such as request delays, server timeouts, and network instability can affect large-scale scraping operations, especially when data needs to be collected frequently or in real time.

2.2. Anti-scraping and detection mechanisms

Many websites actively deploy anti-scraping and bot detection systems to control automated access. Common measures include CAPTCHA challenges, browser behavior analysis, and bot protection services such as Cloudflare. These systems are designed to identify non-human traffic patterns and restrict or block suspicious requests.

Another widespread practice is IP blocking and rate limiting, where excessive or repetitive requests from the same source are temporarily or permanently denied. Without proper management of access patterns, IP addresses, and browser environments, scraping activities may quickly become unstable or ineffective.

2.3. Legal and ethical considerations

Beyond technical barriers, web scraping raises important legal and ethical questions. Not all websites permit automated data collection, and some explicitly prohibit scraping in their terms of service. Ignoring these policies can expose businesses to legal risks or service disruptions.

The robots.txt file also plays a key role in defining acceptable automated access by indicating which sections of a website are intended for bots and which are restricted. While not legally binding in all jurisdictions, robots.txt is widely regarded as an ethical standard that responsible scraping practices should respect.

In cases where scraped data includes personal or identifiable information, data privacy regulations such as GDPR introduce additional compliance requirements. Even publicly available data may be subject to rules governing how it can be collected, stored, and processed.

2.3. Data accuracy and validation

Finally, relying solely on scraped data without proper validation can lead to inaccurate or misleading conclusions. Web data may contain duplicates, outdated information, or contextual noise that affects analysis quality. To mitigate these risks, scraped data is often combined with other research methods, such as surveys, first-party data, or manual verification, to improve reliability and support sound decision-making.

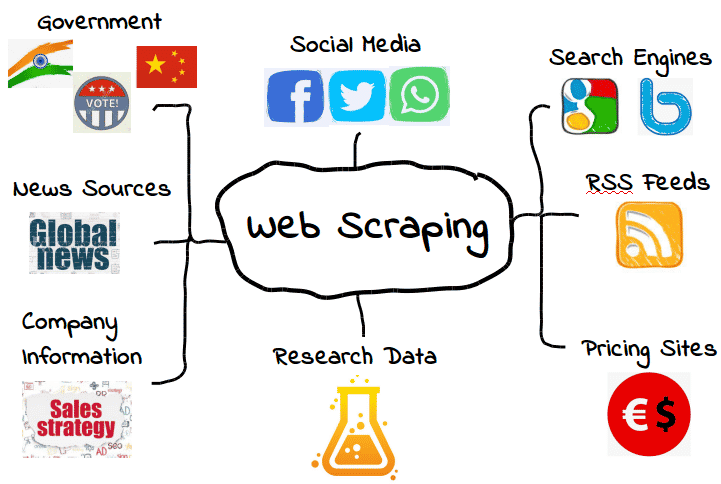

3. Applications of Web Scraping across various industries

Web scraping is not just about collecting data from websites. Its real value lies in how that data is processed, analyzed, and used to support informed decision-making. Across different industries, web scraping helps transform raw web data into insights that drive strategy, operations, and product development.

3.1. E-Commerce and competitive analysis

The e-commerce industry has revolutionized how businesses operate, with web scraping playing a pivotal role in shaping marketing strategies and optimizing decisions.

Scraping product data from competitor websites allows businesses to understand pricing structures, promotional tactics, and inventory levels. This intelligence is crucial for formulating competitive pricing strategies that maximize profits while attracting customers.

Furthermore, by analyzing customer reviews and ratings from various platforms, businesses can gain insights into consumer preferences, leading to better product development and marketing direction.

Integrating web scraping into e-commerce workflows enables companies to maintain a pulse on market trends and adapt swiftly, ensuring sustained growth in an ever-evolving landscape.

How it works in practice

Web scraping is typically used as a continuous monitoring process rather than a one-time data pull. Businesses collect product prices, availability, and promotional information from competitors’ websites, then store this data over time to build historical price datasets. These datasets allow teams to compare pricing trends, detect sudden changes, and adjust their own pricing or promotions accordingly.

However, eCommerce platforms are among the most protected environments against automated data collection. Frequent requests, repetitive browsing patterns, and identical browser fingerprints can quickly trigger anti-bot systems, leading to IP blocking or account restrictions. To maintain stable data collection, scraping operations often require IP rotation, session management, and isolated browser profiles to mimic real user behavior and reduce detection risks.

3.2. Market research and consumer insights

Quality market research is vital for business success. Web scraping empowers organizations to obtain accurate and extensive data that drives informed decision-making. By harnessing web scraped data, businesses can analyze consumer trends effectively, determining which products resonate with target audiences.

For example, monitoring social media sentiment about specific brands or products helps companies gauge public perception and identify potential areas for improvement. With structured data obtained through web scraping, businesses can optimize their entry points into new markets and refine their offerings.

Moreover, web scraping supports competitor monitoring, keeping track of competitor launches, promotions, and market positioning. Such insights allow businesses to innovate and strategize accordingly, remaining ahead of the curve in a competitive marketplace.

Turning raw data into consumer insights

In market research, web scraping is widely used to collect unstructured data from product reviews, discussion forums, and social media platforms where consumers openly share opinions and experiences. Compared to structured datasets, this type of data provides richer context but also introduces significant noise, including spam, duplicate content, and irrelevant conversations.

To extract meaningful insights, scraped data must be cleaned, filtered, and normalized before analysis. Once processed, it can be combined with sentiment analysis techniques to identify common pain points, emerging preferences, and shifts in consumer perception over time. This approach allows businesses to move beyond surface-level metrics and gain a deeper understanding of how customers actually feel about products, brands, and market trends.

3.3. Real estate data collection

The real estate sector is another area where web scraping shines. Agents and brokers increasingly rely on web scraping tools to populate their databases with up-to-date property listings and rental information. This practice allows them to develop comprehensive market analyses that inform their business decisions.

By collecting and analyzing vacancy rates, pricing trends, and property types, real estate professionals can make informed predictions about the market's trajectory. Additionally, understanding rental yields and property values through scraped data equips agents to provide accurate assessments to clients.

Web scraping helps real estate agents combat competition by ensuring they have the latest data at their fingertips, enabling them to serve clients effectively and efficiently.

Long-term data collection in real estate

Web scraping is commonly used to gather structured information such as property prices, locations, listing status, and historical changes over time. By tracking how listings appear, disappear, or change in price, businesses can analyze market trends, estimate demand, and identify potential investment opportunities at both local and regional levels.

Real estate websites often deploy strong anti-bot mechanisms to protect high-value data, including request rate limits, behavioral analysis, and advanced bot detection systems. Since meaningful insights in this sector rely on long-term and recurring data collection, scraping operations must be designed to run on a scheduled basis while maintaining stable access. This makes consistency, session control, and identity isolation critical factors for reliable real estate data extraction.

3.4. News monitoring and industry analysis

Timely news updates can significantly impact businesses across sectors. Companies need to stay informed about developments in their industry to pivot correctly when necessary. Web scraping provides a powerful solution for monitoring news sources and aggregating critical reports.

By automating the process of gathering articles and news snippets, businesses can create detailed summaries of emerging trends, regulatory changes, and market shifts. This capability is particularly relevant for companies heavily influenced by current events or those requiring constant vigilance regarding their reputation.

Furthermore, web scraping can streamline the research process for professionals seeking insights into industry reports, white papers, and analyst opinions. By compiling all relevant information in one place, businesses can enhance their strategic planning efforts and mitigate potential risks.

Ensuring data quality in news monitoring

In news monitoring, web scraping can be implemented in both real-time and scheduled models, depending on how quickly information needs to be captured. Real-time scraping is often used for breaking news and trend detection, while scheduled scraping supports long-term industry analysis and content tracking over time.

A key challenge in this context is avoiding duplicate content, as the same news may appear across multiple sources or be syndicated with minor variations. To maintain data quality, scraped content must be deduplicated, categorized, and enriched with relevant tags such as topic, industry, publication date, and source credibility. These steps allow organizations to transform large volumes of news data into structured intelligence that supports competitive analysis, risk monitoring, and strategic planning.

4. Harnessing the power of Web Scraping for review analysis

Customer reviews represent one of the richest forms of unstructured data available online. Unlike numerical metrics, reviews capture opinions, emotions, and detailed experiences that are difficult to quantify without systematic processing.

Web scraping enables businesses to collect this feedback at scale and convert scattered comments into structured datasets. When combined with data cleaning and analytical techniques, scraped review data can reveal sentiment trends, recurring issues, and opportunities for improvement that may not be visible through traditional research methods.

4.1. Collecting user feedback from social media platforms

Social media is a treasure trove of user-generated content, including reviews and comments about products or services. Through web scraping, businesses can collect this valuable feedback systematically, allowing them to identify strengths and weaknesses.

For example, a fashion retailer can scrape reviews from platforms such as Instagram, Twitter, and Facebook to assess customer sentiments regarding specific products. Analyzing this data reveals prevalent themes and establishes connections between customer experiences and purchasing behavior.

Through these insights, businesses can enhance their products and improve their branding strategies, ultimately fostering customer loyalty.

Practical challenges of scraping social media platforms

Scraping user feedback from social media platforms presents a unique set of challenges compared to traditional websites. Most platforms require users to be logged in, enforce session-based access, and impose strict limits on how much data can be viewed or collected from a single account within a given timeframe. These restrictions are designed to prevent automated behavior and protect platform integrity.

As a result, large-scale social media scraping often requires managing multiple accounts, maintaining active sessions, and operating across isolated browser environments. Using different browser profiles helps simulate real user behavior, reduce correlation between accounts, and minimize the risk of detection or account suspension. Without proper profile and environment management, social media scraping workflows tend to become unstable and difficult to scale.

4.2. Competitive sentiment analysis

Understanding public perception of competing brands is equally important. Web scraping enables companies to collect and analyze reviews from various sources, helping them discern consumers' sentiments toward competitors.

Tracking sentiment trends over time

Competitive sentiment analysis becomes significantly more valuable when sentiment is tracked over time rather than analyzed as isolated snapshots. By continuously scraping reviews, comments, and mentions related to competing brands, businesses can map how public perception shifts in response to product launches, pricing changes, customer service incidents, or marketing campaigns.

Scraped data can then be combined with natural language processing (NLP) techniques to categorize sentiment and compare Brand A against Brand B across consistent timeframes. This approach helps identify not only which brand performs better overall, but also when and why sentiment gaps emerge, enabling more informed strategic decisions based on real-world consumer feedback.

This competitive sentiment analysis allows businesses to pinpoint areas where rivals excel and where they fall short. For example, if a competitor receives consistent praise for their customer service, this insight can inspire businesses to elevate their own service standards, potentially capturing dissatisfied customers looking for alternatives.

By embracing the insights gleaned from review analysis, businesses can foster a proactive approach to addressing public perception and enhancing their market position.

4.3. Driving product development decisions

With insights derived from web scraping, businesses can use consumer feedback to drive their product development decisions. Identifying common complaints or suggestions empowers teams to innovate based on actual consumer needs rather than assumptions.

For instance, a tech company could analyze user feedback related to battery life issues in a smartphone model. By recognizing this pain point, the company can focus on improving battery performance in future iterations, increasing customer satisfaction and brand loyalty.

In essence, leveraging web scraping for review analysis fosters a culture of responsiveness within organizations, allowing them to evolve continually according to consumer demands.

From insights to product decisions

When review data is systematically collected and analyzed, it can directly influence product development decisions. Recurring complaints about specific features, usability issues, or missing functionality often signal areas where improvements are needed. Conversely, consistently positive feedback can validate existing design choices or highlight strengths worth reinforcing.

In this workflow, web scraping acts as the foundation of a broader decision-making pipeline: data is collected from reviews, transformed into structured insights through analysis, and ultimately translated into concrete actions such as feature updates, pricing adjustments, or changes in product positioning. This insight-to-action loop allows product teams to base decisions on real user feedback rather than assumptions or limited surveys.

5. Conclusion

Web scraping has become a strategic asset for businesses seeking to operate in data-driven environments. Across industries such as eCommerce, real estate, market research, and media monitoring, it enables organizations to collect timely information, uncover patterns, and make informed decisions based on real-world data rather than assumptions.

As scraping operations scale, success depends not only on extraction techniques, but also on how data is collected responsibly and maintained over time. Automation, ethical considerations, and accurate data interpretation all play a critical role in ensuring scraped data remains reliable and actionable. In addition, large-scale web scraping increasingly faces technical barriers such as IP blocking, behavior analysis, and anti-bot systems.

To support stable and long-term scraping workflows, businesses often require infrastructure that can manage multiple accounts, isolate browser environments, and reduce detection risks. Antidetect browser solutions like Hidemyacc help address these operational challenges by providing separate browser profiles and flexible IP management, enabling data collection processes to run more securely and consistently. When combined with a well-designed scraping strategy, such tools allow organizations to unlock the full potential of web data while minimizing disruption.

Built for Large-Scale Web Scraping

Support long-term, automated data collection with isolated browser environments and flexible identity management.

Explore HidemyaccIf you have any further questions, comments, or suggestions, feel free to contact us via Telegram, Skype, or Facebook Messenger support.

- AI web scraping tool free: Which are the best tools for your projects?

- Scraping eBay: Tools, tips, and best practices for beginners

- What is Antidetect Browser? The privacy tool that beats VPNs

6. FAQ

Is web scraping legal?

Web scraping itself is not illegal, but its legality depends on how and where it is used. Some websites explicitly prohibit automated data collection in their terms of service, while others allow limited access. Businesses should always review website policies, respect robots.txt guidelines, and avoid collecting personal or sensitive data without proper authorization to remain compliant with legal and ethical standards.

What is the difference between web scraping and web crawling?

Web crawling focuses on discovering and indexing web pages, often for search engines, while web scraping is designed to extract specific data from those pages. Crawlers map the structure of the web, whereas scrapers target defined data points such as prices, reviews, or listings for analysis and decision-making purposes.

Why do websites block web scraping activities?

Websites may block scraping to protect server resources, prevent data misuse, or maintain competitive advantages. Common blocking mechanisms include IP rate limiting, CAPTCHA challenges, browser fingerprint analysis, and behavior-based detection systems. These measures are especially common on eCommerce, social media, and real estate platforms.

How can businesses reduce the risk of being detected while scraping data?

Reducing detection risk typically involves managing request frequency, rotating IP addresses, maintaining realistic browsing behavior, and isolating sessions across different browser environments. Using separate browser profiles helps prevent correlation between scraping activities, making large-scale and long-term data collection more stable.

When is web scraping a better option than using APIs?

Web scraping is often used when APIs are unavailable, limited, expensive, or do not provide the required level of detail. APIs are ideal for structured and permission-based access, while scraping offers flexibility for extracting publicly available data that cannot be accessed through official interfaces.

Can web scraping support long-term business strategy?

Yes. When implemented responsibly, web scraping supports continuous market monitoring, competitive analysis, sentiment tracking, and product optimization. The key is consistency and data quality—scraping should be part of an ongoing data pipeline rather than a one-time task.